
[Jetson Orin Nano] Basic Setup for the CSI Camera and YOLOv8 (2024. 7. 27. 20:09)

Basic Setup

The goal is to set up the following on a Jetson Orin Nano board running JetPack SDK 5.1.3:

[ OpenCV + YOLOv8 + CSI camera + CUDA + Torch ]

Assuming JetPack 5.1.3 is already installed, install the items below in order.
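Before installing anything, it can be worth confirming which release the board actually runs. A minimal sketch that reads `/etc/nv_tegra_release`, assuming (1) its first line looks like `# R35 (release), REVISION: 5.0, ...` and (2) JetPack 5.1.3 corresponds to L4T R35.5.0, which is my understanding rather than something stated in this post:

```python
import re
from pathlib import Path

RELEASE_FILE = Path("/etc/nv_tegra_release")
# Assumption: JetPack 5.1.3 ships L4T R35.5.0 (check NVIDIA's release notes for your image)
EXPECTED = (35, 5, 0)


def parse_l4t_release(text):
    """Extract (release, revision major, revision minor) from nv_tegra_release text, or None."""
    match = re.search(r"R(\d+)\s+\(release\),\s+REVISION:\s+(\d+)\.(\d+)", text)
    if match is None:
        return None
    return tuple(int(group) for group in match.groups())


def main():
    if not RELEASE_FILE.exists():
        print(f"{RELEASE_FILE} not found (not running on Jetson/L4T?)")
        return
    version = parse_l4t_release(RELEASE_FILE.read_text())
    print(f"L4T release: {version}")
    if version != EXPECTED:
        print("This does not look like JetPack 5.1.3; the steps below may not match")


if __name__ == "__main__":
    main()
```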

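For OpenCV, the Qengineering site below provides the whole build process as a script.

On the Orin Nano board, OpenCV and Torch have to be built from source with CMake to get CUDA support.

OpenCV must also be built with the GStreamer option set to YES (`WITH_GSTREAMER=ON`); otherwise it cannot open the CSI camera through a GStreamer pipeline.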
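To confirm that a finished build actually has both options enabled, `cv2.getBuildInformation()` prints lines such as `GStreamer: YES (...)` and `NVIDIA CUDA: YES (...)`. A small sketch that parses that text; the function name is my own, and the check only looks for those two labels:

```python
def opencv_build_flags(info):
    """Return whether GStreamer and CUDA are enabled, given cv2.getBuildInformation() text."""
    flags = {"GStreamer": False, "CUDA": False}
    for line in info.splitlines():
        key, sep, value = line.strip().partition(":")
        if not sep:
            continue  # section headers without a value, blank lines
        enabled = value.strip().startswith("YES")
        if key == "GStreamer":
            flags["GStreamer"] = enabled
        elif key == "NVIDIA CUDA":
            flags["CUDA"] = enabled
    return flags


def main():
    # cv2 is imported here so the parser above can be reused without OpenCV installed
    try:
        import cv2
    except ImportError:
        print("OpenCV is not importable from this Python environment")
        return
    flags = opencv_build_flags(cv2.getBuildInformation())
    print(f"OpenCV {cv2.__version__}: {flags}")
    if not all(flags.values()):
        print("Rebuild with -D WITH_GSTREAMER=ON and -D WITH_CUDA=ON")


if __name__ == "__main__":
    main()
```

A pip-installed `opencv-python` wheel typically reports `GStreamer: NO`, which is a quick way to tell it apart from the source build.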

How to build OpenCV with Gstreamer (galaktyk.medium.com)
https://galaktyk.medium.com/how-to-build-opencv-with-gstreamer-b11668fa09c

Installation docs:

Install OpenCV on Jetson Orin Nano - Q-engineering (qengineering.eu): a thorough guide to installing OpenCV 4.8.0 on the NVIDIA Jetson Orin Nano from scratch.
https://qengineering.eu/install-opencv-on-orin-nano.html

Qengineering - Overview (github.com): computer vision, machine learning, applied mathematics.
https://github.com/Qengineering/

NVIDIA Jetson - Ultralytics docs (docs.ultralytics.com): deploying Ultralytics YOLOv8 on NVIDIA Jetson devices, with performance benchmarks.
https://docs.ultralytics.com/guides/nvidia-jetson/

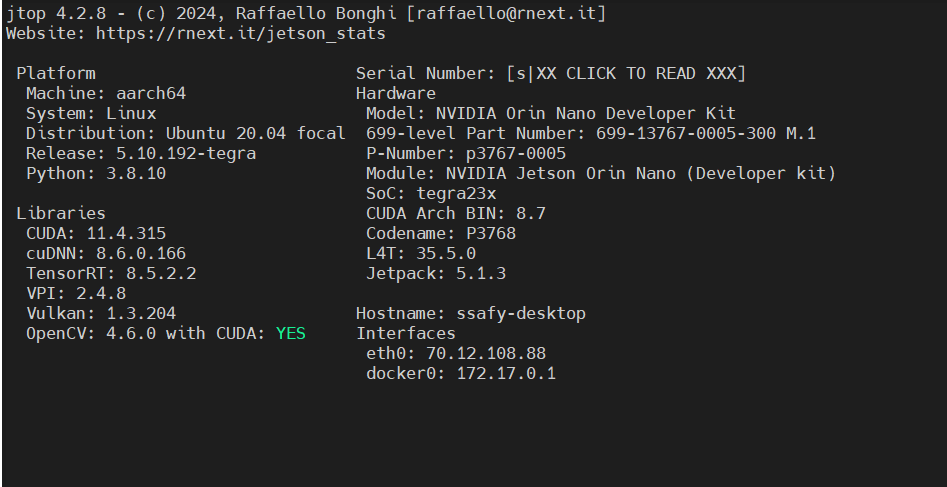

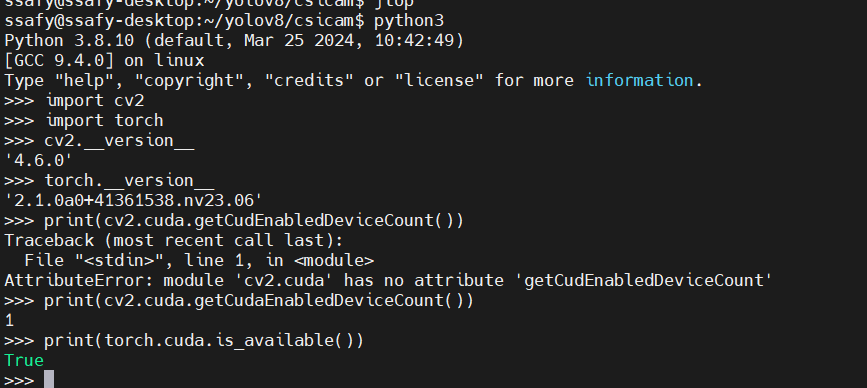

Version information after setup
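The screenshots above show the installed versions. To print the same kind of information from a script, here is a minimal sketch that only relies on each package's `__version__` attribute and `torch.cuda.is_available()`; the module list is my own choice, not taken from the post:

```python
import importlib

MODULES = ("cv2", "torch", "torchvision", "ultralytics")


def collect_versions():
    """Import each module if possible and record its __version__ (None if the import fails)."""
    report = {}
    loaded = {}
    for name in MODULES:
        try:
            loaded[name] = importlib.import_module(name)
        except Exception as exc:  # ImportError, or a broken install raising something else
            report[name] = None
            print(f"{name}: import failed ({exc})")
            continue
        report[name] = str(getattr(loaded[name], "__version__", "unknown"))
    torch = loaded.get("torch")
    # False here often means a CPU-only PyTorch wheel was installed instead of a CUDA build
    report["cuda_available"] = bool(torch.cuda.is_available()) if torch else None
    return report


if __name__ == "__main__":
    for key, value in collect_versions().items():
        print(f"{key}: {value}")
```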

Camera info

IMX219 160° wide-angle camera module for Jetson Nano (to connect it to the board, you also need to buy a separate cable, the same one used for the Raspberry Pi 5)

IMX219 160° wide-angle camera module for Jetson Nano, 8MP (devicemart.co.kr): an 8-megapixel, 160° wide-angle camera module for the Jetson Nano.
https://www.devicemart.co.kr/goods/view?no=12538383

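Test code

Below is test code for the Jetson Orin Nano: a camera test, YOLOv8 inference, and recording.

Pipeline for driving the CSI cameras (adjust it as needed). As written, it composites two cameras (sensor-id 0 and 1) side by side into a single 3840x1080 BGR frame for OpenCV's appsink.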

```text
nvarguscamerasrc sensor-id=0 ! video/x-raw(memory:NVMM),width=1920,height=1080,framerate=21/1,format=NV12 ! nvvidconv ! video/x-raw(memory:NVMM),format=RGBA ! queue ! nvcomp.sink_0
nvarguscamerasrc sensor-id=1 ! video/x-raw(memory:NVMM),width=1920,height=1080,framerate=21/1,format=NV12 ! nvvidconv ! video/x-raw(memory:NVMM),format=RGBA ! queue ! nvcomp.sink_1
nvcompositor name=nvcomp sink_0::xpos=0 sink_0::ypos=0 sink_0::width=1920 sink_0::height=1080 sink_1::xpos=1920 sink_1::ypos=0 sink_1::width=1920 sink_1::height=1080 ! video/x-raw(memory:NVMM),format=RGBA,width=3840,height=1080 ! nvvidconv ! video/x-raw,format=BGRx ! videoconvert ! video/x-raw,format=BGR ! appsink drop=1
```
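The compositor layout in this pipeline follows a simple rule: camera `i` is placed at `xpos = i * width`, and the output caps are `num_cameras * width` pixels wide. When changing the resolution, it is easy to update one number and forget the others, so here is a small generator as a sketch. The function name and parameters are my own; with the defaults it reproduces the pipeline above as a single string.

```python
def side_by_side_pipeline(num_cameras=2, width=1920, height=1080, fps=21):
    """Build a GStreamer pipeline string that tiles CSI cameras horizontally for OpenCV."""
    branches = []  # one nvarguscamerasrc branch per sensor, each feeding a compositor pad
    layout = []    # pad properties: camera i is placed at x = i * width
    for i in range(num_cameras):
        branches.append(
            f"nvarguscamerasrc sensor-id={i} ! "
            f"video/x-raw(memory:NVMM),width={width},height={height},"
            f"framerate={fps}/1,format=NV12 ! "
            "nvvidconv ! video/x-raw(memory:NVMM),format=RGBA ! queue ! "
            f"nvcomp.sink_{i}"
        )
        layout.append(
            f"sink_{i}::xpos={i * width} sink_{i}::ypos=0 "
            f"sink_{i}::width={width} sink_{i}::height={height}"
        )
    # The composited frame is num_cameras * width wide, then converted to BGR for appsink
    compositor = (
        "nvcompositor name=nvcomp " + " ".join(layout) + " ! "
        f"video/x-raw(memory:NVMM),format=RGBA,width={num_cameras * width},height={height} ! "
        "nvvidconv ! video/x-raw,format=BGRx ! videoconvert ! "
        "video/x-raw,format=BGR ! appsink drop=1"
    )
    return " ".join(branches) + " " + compositor
```

Usage would look like `cv2.VideoCapture(side_by_side_pipeline(width=1280, height=720), cv2.CAP_GSTREAMER)`, which yields a 2560x720 frame; whether a given resolution and frame rate are accepted depends on the sensor modes `nvarguscamerasrc` exposes.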

Basic code for displaying the CSI camera feed with OpenCV

```python
import sys

import cv2

# Two IMX219 cameras (sensor-id 0 and 1) composited side by side into one 3840x1080 BGR frame
PIPELINE = (
    "nvarguscamerasrc sensor-id=0 ! video/x-raw(memory:NVMM),width=1920,height=1080,framerate=21/1,format=NV12 ! "
    "nvvidconv ! video/x-raw(memory:NVMM),format=RGBA ! queue ! nvcomp.sink_0 "
    "nvarguscamerasrc sensor-id=1 ! video/x-raw(memory:NVMM),width=1920,height=1080,framerate=21/1,format=NV12 ! "
    "nvvidconv ! video/x-raw(memory:NVMM),format=RGBA ! queue ! nvcomp.sink_1 "
    "nvcompositor name=nvcomp "
    "sink_0::xpos=0 sink_0::ypos=0 sink_0::width=1920 sink_0::height=1080 "
    "sink_1::xpos=1920 sink_1::ypos=0 sink_1::width=1920 sink_1::height=1080 ! "
    "video/x-raw(memory:NVMM),format=RGBA,width=3840,height=1080 ! "
    "nvvidconv ! video/x-raw,format=BGRx ! videoconvert ! video/x-raw,format=BGR ! appsink drop=1"
)

cap = cv2.VideoCapture(PIPELINE, cv2.CAP_GSTREAMER)
if not cap.isOpened():
    print("Failed to open capture")
    sys.exit(-1)

w = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
h = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
fps = float(cap.get(cv2.CAP_PROP_FPS))
print("capture opened, framing %dx%d@%f fps" % (w, h, fps))

while True:
    ret, frame = cap.read()
    if not ret:
        print("Failed to read from capture")
        break
    cv2.imshow("Test", frame)
    # Press q in the preview window to quit
    if cv2.waitKey(1) & 0xFF == ord("q"):
        break

cap.release()
cv2.destroyAllWindows()
```

Using Ultralytics YOLOv8 with the CSI camera (headless: results are only written to output.mp4)

```python
import datetime

import cv2
from ultralytics import YOLO

from helper import create_video_writer  # local helper module (not shown in this post)

# Minimum confidence for a detection to be drawn
CONFIDENCE_THRESHOLD = 0.6
GREEN = (0, 255, 0)
WHITE = (255, 255, 255)

# Class names, one per line
with open("./coco128.txt", "r") as f:
    class_list = f.read().splitlines()

model = YOLO("./yolov8n.pt")


# GStreamer pipeline: two cameras side by side, so the output frame is (2 * width) x height
def gstreamer_pipeline(width=1280, height=720):
    return (
        f"nvarguscamerasrc sensor-id=0 ! video/x-raw(memory:NVMM),width={width},height={height},framerate=21/1,format=NV12 ! "
        "nvvidconv ! video/x-raw(memory:NVMM),format=RGBA ! queue ! nvcomp.sink_0 "
        f"nvarguscamerasrc sensor-id=1 ! video/x-raw(memory:NVMM),width={width},height={height},framerate=21/1,format=NV12 ! "
        "nvvidconv ! video/x-raw(memory:NVMM),format=RGBA ! queue ! nvcomp.sink_1 "
        "nvcompositor name=nvcomp "
        f"sink_0::xpos=0 sink_0::ypos=0 sink_0::width={width} sink_0::height={height} "
        f"sink_1::xpos={width} sink_1::ypos=0 sink_1::width={width} sink_1::height={height} ! "
        f"video/x-raw(memory:NVMM),format=RGBA,width={2 * width},height={height} ! "
        "nvvidconv ! video/x-raw,format=BGRx ! videoconvert ! video/x-raw,format=BGR ! appsink drop=1"
    )


def main():
    # print(cv2.getBuildInformation())  # should report GStreamer: YES
    print("cap load ...")
    # The frame size comes from the caps in the pipeline string, so it is set there, not with cap.set()
    cap = cv2.VideoCapture(gstreamer_pipeline(), cv2.CAP_GSTREAMER)
    if not cap.isOpened():
        print("can't open csi camera.")
        return
    print("load cap")

    writer = create_video_writer(cap, "output.mp4")
    try:
        while True:
            start = datetime.datetime.now()
            ret, frame = cap.read()
            if not ret:
                print("Cam Error")
                break

            detection = model(frame)[0]
            for data in detection.boxes.data.tolist():
                # data: [xmin, ymin, xmax, ymax, confidence_score, class_id]
                confidence = float(data[4])
                if confidence < CONFIDENCE_THRESHOLD:
                    continue
                xmin, ymin, xmax, ymax = map(int, data[:4])
                label = int(data[5])
                cv2.rectangle(frame, (xmin, ymin), (xmax, ymax), GREEN, 2)
                cv2.putText(frame, f"{class_list[label]} {confidence * 100:.0f}%",
                            (xmin, ymin), cv2.FONT_ITALIC, 1, WHITE, 2)

            end = datetime.datetime.now()
            total = (end - start).total_seconds()
            print(f"Time to process 1 frame: {total * 1000:.0f} milliseconds")
            fps = f"FPS: {1 / total:.2f}"
            cv2.putText(frame, fps, (10, 20), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 255), 2)

            # No preview window in this version, so stop with Ctrl+C
            writer.write(frame)
    except KeyboardInterrupt:
        pass
    finally:
        # release() finalizes the MP4 file
        cap.release()
        writer.release()


if __name__ == "__main__":
    main()
```

Recording + YOLOv8 + CSI camera (with a live preview window)

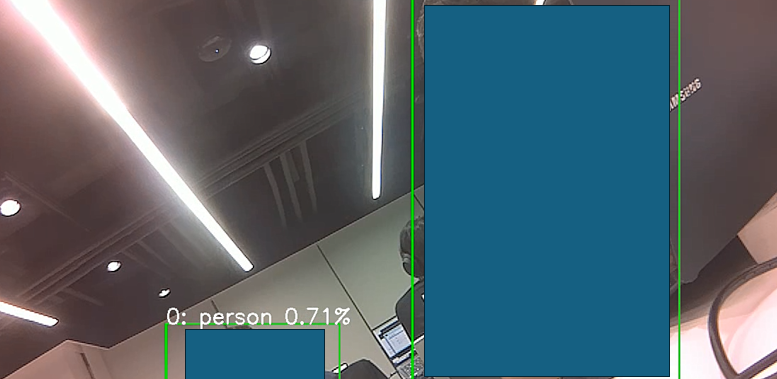

```python
import datetime

import cv2
from ultralytics import YOLO

from helper import create_video_writer  # local helper module (not shown in this post)

# Minimum confidence for a detection to be drawn
CONFIDENCE_THRESHOLD = 0.6
GREEN = (0, 255, 0)
WHITE = (255, 255, 255)

# Class names, one per line
with open("./coco128.txt", "r") as f:
    class_list = f.read().splitlines()

model = YOLO("./yolov8n.pt")


# GStreamer pipeline: two cameras side by side, so the output frame is (2 * width) x height
def gstreamer_pipeline(width=1280, height=720):
    return (
        f"nvarguscamerasrc sensor-id=0 ! video/x-raw(memory:NVMM),width={width},height={height},framerate=21/1,format=NV12 ! "
        "nvvidconv ! video/x-raw(memory:NVMM),format=RGBA ! queue ! nvcomp.sink_0 "
        f"nvarguscamerasrc sensor-id=1 ! video/x-raw(memory:NVMM),width={width},height={height},framerate=21/1,format=NV12 ! "
        "nvvidconv ! video/x-raw(memory:NVMM),format=RGBA ! queue ! nvcomp.sink_1 "
        "nvcompositor name=nvcomp "
        f"sink_0::xpos=0 sink_0::ypos=0 sink_0::width={width} sink_0::height={height} "
        f"sink_1::xpos={width} sink_1::ypos=0 sink_1::width={width} sink_1::height={height} ! "
        f"video/x-raw(memory:NVMM),format=RGBA,width={2 * width},height={height} ! "
        "nvvidconv ! video/x-raw,format=BGRx ! videoconvert ! video/x-raw,format=BGR ! appsink drop=1"
    )


def main():
    # print(cv2.getBuildInformation())  # should report GStreamer: YES
    print("cap load ...")
    # The frame size comes from the caps in the pipeline string, so it is set there, not with cap.set()
    cap = cv2.VideoCapture(gstreamer_pipeline(), cv2.CAP_GSTREAMER)
    if not cap.isOpened():
        print("can't open csi camera.")
        return
    print("load cap")

    writer = create_video_writer(cap, "fin_output.mp4")
    try:
        while True:
            start = datetime.datetime.now()
            ret, frame = cap.read()
            if not ret:
                print("Cam Error")
                break

            detection = model(frame)[0]
            for data in detection.boxes.data.tolist():
                # data: [xmin, ymin, xmax, ymax, confidence_score, class_id]
                confidence = float(data[4])
                if confidence < CONFIDENCE_THRESHOLD:
                    continue
                xmin, ymin, xmax, ymax = map(int, data[:4])
                label = int(data[5])
                cv2.rectangle(frame, (xmin, ymin), (xmax, ymax), GREEN, 2)
                cv2.putText(frame, f"{class_list[label]} {confidence * 100:.0f}%",
                            (xmin, ymin), cv2.FONT_ITALIC, 1, WHITE, 2)

            end = datetime.datetime.now()
            total = (end - start).total_seconds()
            print(f"Time to process 1 frame: {total * 1000:.0f} milliseconds")
            fps = f"FPS: {1 / total:.2f}"
            cv2.putText(frame, fps, (10, 20), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 255), 2)

            cv2.imshow("frame", frame)
            writer.write(frame)
            # Press q in the preview window to stop recording
            if cv2.waitKey(1) & 0xFF == ord("q"):
                print("end!!!")
                break
    finally:
        # release() finalizes the MP4 file
        cap.release()
        writer.release()
        cv2.destroyAllWindows()
    print("program exit...")


if __name__ == "__main__":
    main()
```

Execution result (hidden to protect my teammates' privacy... but it works fine)
